There’s a growing, bipartisan fantasy that if we force the internet to check everyone’s ID at the door, kids will be safe and society will be tidy again. It’s comforting theater—security cosplay with a side of moral panic.

This is the internet we’re talking about: a network built to resist control. As John Gilmore, a co-founder of the Electronic Frontier Foundation, famously said, “The Net interprets censorship as damage and routes around it.” In other words, any law that tries to stop kids from seeing certain content, or to police adult material at scale, will inevitably lag behind the technology curve. Worse, it restricts the law-abiding while making bad actors more agile.

Mashable’s recent piece on age verification captures the scope creep: what began as “keep porn away from kids” is metastasizing into ID checks across vast swaths of the web. In the U.S., state laws are colliding with platform policies; in the U.K., the Online Safety Act is already reshaping traffic patterns and pushing users toward VPNs or smaller, less accountable sites. The pattern is familiar: when rules are vague and penalties are huge, platforms over-comply, lawful speech gets caught in the blast radius, and kids still find the exits.

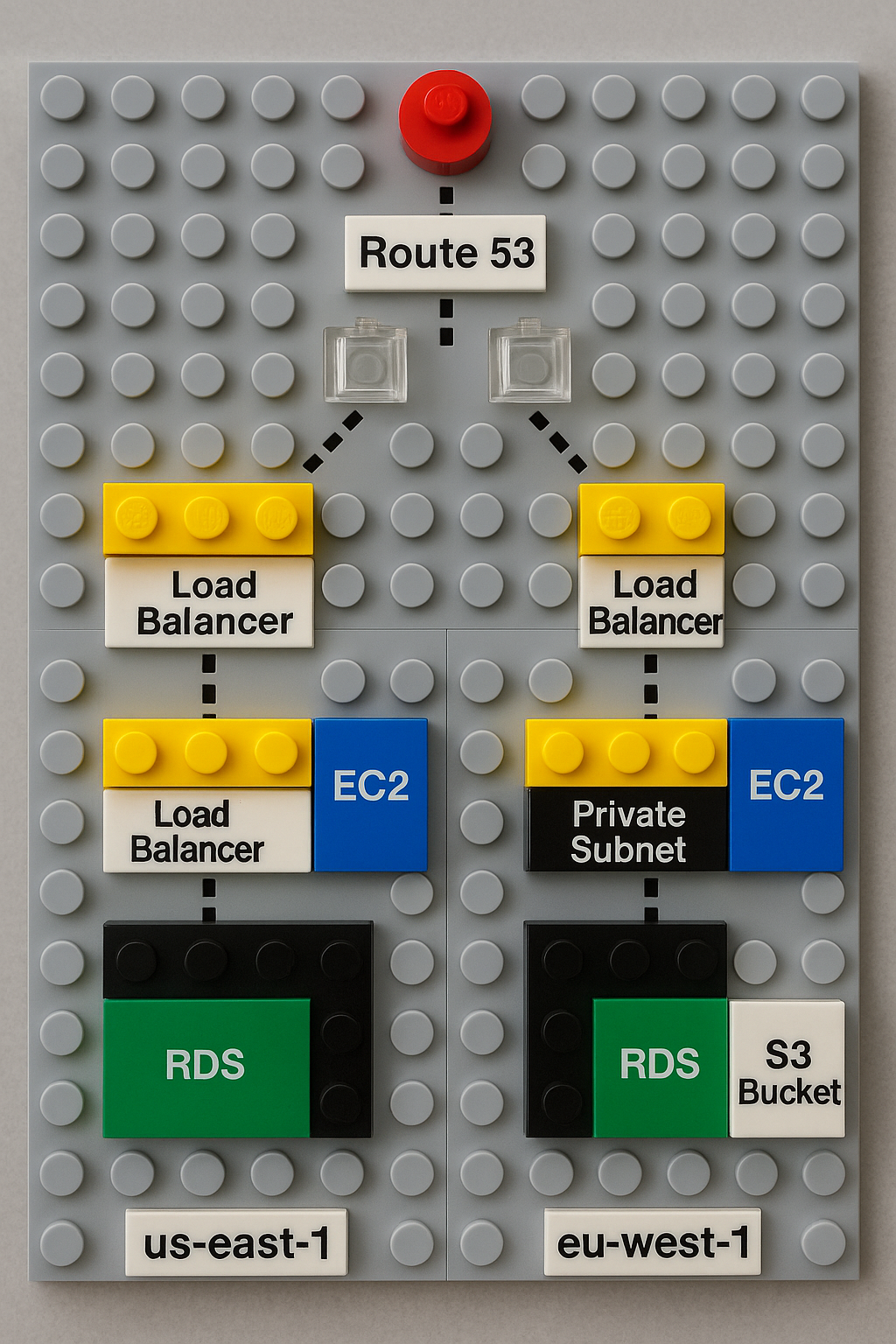

As a neurodivergent leader who lives in systems, not slogans, I’m allergic to one-shot fixes that create bigger problems downstream. “Verify everyone’s age” sounds simple until you diagram the system: identity databases, third-party vendors, face scans, data retention, breach surfaces, false positives that lock out marginalized youth, and chilling effects on those seeking help for mental health, sexuality, or abuse. Kids don’t disappear; they route around damage. So do predators.

What the current age-verification wave is actually doing

Platforms, lawmakers, and well-meaning parents alike are trying to plug a river with a thimble. As noted above, the internet treats censorship as damage and routes around it. These regulations create friction for responsible users while predators and malicious actors find new paths to reach victims, which makes the system inherently reactive, inefficient, and often counterproductive.

- Driving measurable behavior changes—with side effects. After the U.K.’s new rules kicked in, major adult sites reported steep traffic drops from the U.K. and a corresponding surge in VPN use. That’s not safety; it’s displacement. And displacement without education usually means less visibility and fewer guardrails.

- Inviting over-removal and preemptive censorship. When “protect kids from harm” is undefined but fines are existential, platforms remove first and ask questions never. That sweeps up political speech, historical content, and the kind of peer-to-peer knowledge kids actually use to stay safe.

- Shifting liability into instant bans, not investigations. Platforms under pressure to “do something” about child sexual abuse material (CSAM) often default to account deletion without ever verifying the reports. That shortcut isn’t safety; it’s cover-your-ass compliance. Worse, bad actors know this: mass-reporting has become a weapon against marginalized communities, especially LGBTQIA+ users who share perfectly lawful content about identity, sexuality, or youth support. The result? Queer teens lose lifelines, predators remain, and the system rewards false positives while discouraging appeals.

- Expanding surveillance infrastructure. Government-ID uploads and AI facial age estimation centralize sensitive data that can be breached, misused, or repurposed. Building surveillance to maybe block a 14-year-old from a website is a lousy trade.

⚠️ Weaponized Reporting: A Hidden Attack Vector

On paper, mandatory content moderation looks like protection. In practice, false reporting has become a blunt weapon. Coordinated brigades mass-flag LGBTQIA+ creators and communities, triggering automatic bans for “CSAM” or “adult content” even when posts are lawful, supportive, and essential for at-risk youth.

Because platforms fear fines more than false positives, they remove first and rarely investigate. For marginalized teens, this doesn’t reduce risk — it isolates them further, cutting them off from identity-affirming spaces while predators adapt elsewhere. When enforcement itself is an attack vector, safety isn’t safety. It’s silencing.

“But think of the children.” I am. Here’s what actually helps.

- Treat online safety as a literacy, not a ‘one and done’ step. Kids need education in spotting grooming, social engineering, and boundary-setting. Universal, age-appropriate digital literacy in schools — plus parent training that isn’t condescending — works. Pair it with counselors who actually understand online dynamics.

- Default-private, context-aware accounts for minors. Youth accounts should ship with DM approval required, friction for new contacts, resettable visibility controls, and explainable nudges. Protect outcomes, not birthdays.

- Pro-user, privacy-preserving age assurance where it’s truly necessary. Use on-device or zero-knowledge checks for narrow cases (like commercial porn) that prove “over 18” without storing ID. If a vendor can’t guarantee no retention, they don’t get the contract.

- Target predators, not the whole population. Fund covert ops, cross-border takedowns, and faster lawful requests. Don’t conscript apps to break encryption — resource investigators properly.

- Hard consequences for dark-pattern growth. Platforms that funnel minors toward adult spaces or sexual content should face liability. Audit algorithms; publish pathways.

- Platform-side 48-hour lockdowns. When a report is filed, the accused account goes into temporary “invisible” mode: no new connections, no discoverability, and limited interactions until moderation investigates. It’s a pressure valve that protects kids from ongoing harm without permanently punishing users if the report turns out to be false.

- Measure what matters. Time-to-takedown, recidivism of banned accounts, rates of unsolicited contact, and successful appeals. If a law or feature can’t show progress here, it’s theater.
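
For the systems-minded, here is a minimal sketch of how the 48-hour lockdown idea from the list above might look as a state machine. Every name in it (`Account`, `Status`, `file_report`, `resolve_report`, `needs_escalation`) is hypothetical and invented for illustration; no platform's real API is being described. One assumption goes beyond what the proposal says: if the window lapses with no moderation decision, the sketch flags the case for escalation rather than auto-restoring or auto-banning.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum
from typing import Optional

LOCKDOWN_WINDOW = timedelta(hours=48)  # the 48-hour window from the proposal


class Status(Enum):
    ACTIVE = "active"
    LOCKED_DOWN = "locked_down"  # temporary "invisible" mode
    BANNED = "banned"


@dataclass
class Account:
    """Hypothetical account record; real platforms track far more state."""
    user_id: str
    status: Status = Status.ACTIVE
    lockdown_expires: Optional[datetime] = None

    def can_add_connections(self) -> bool:
        # No new connections while locked down or banned.
        return self.status is Status.ACTIVE

    def is_discoverable(self) -> bool:
        # Hidden from search and recommendations unless fully active.
        return self.status is Status.ACTIVE


def file_report(account: Account, now: datetime) -> None:
    """A report restricts the account immediately; it does not delete it."""
    if account.status is Status.ACTIVE:
        account.status = Status.LOCKED_DOWN
        account.lockdown_expires = now + LOCKDOWN_WINDOW


def resolve_report(account: Account, substantiated: bool) -> None:
    """Moderation decides the outcome: ban if substantiated, else restore."""
    account.status = Status.BANNED if substantiated else Status.ACTIVE
    account.lockdown_expires = None


def needs_escalation(account: Account, now: datetime) -> bool:
    """Assumption: an expired window with no decision gets escalated."""
    return (
        account.status is Status.LOCKED_DOWN
        and account.lockdown_expires is not None
        and now >= account.lockdown_expires
    )
```

The design choice that matters is that a report moves the account into a reversible state instead of straight to deletion, so a false report costs the accused a temporary restriction rather than their account.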

The policy trap to avoid

We’ve been here before. COPPA (enacted in 1998, in effect since 2000) pushed services to lock out under-13s, which didn’t stop kids from using the internet; it just trained them to lie about their birthday and pushed them into unsupervised corners. When we regulate by pretending kids don’t exist online, we create harm and then congratulate ourselves for lowering numbers on a dashboard.

Today’s wave of age-verification laws risks repeating that mistake at global scale, only now with biometric databases and broader collateral damage to speech, research, and vulnerable communities who rely on anonymity. Even when well-intentioned, these mandates expand surveillance while leaving predators nimble. That’s not safety; that’s optics.

A better north star

When I think about “protecting kids,” I think like an SRE: reduce blast radius, build graceful degradation, and design for how humans actually behave under stress. Kids need skills, defaults, and exits. Parents need tools that aren’t punishment in disguise. Investigators need resources, not backdoors. And all of us need an internet that remains accessible, inclusive, and—yes—messy enough to be human.

We can choose literacy over lockdown, audits over IDs, and targeted enforcement over mass surveillance. If lawmakers insist on doing something, let’s insist it be something that works — not another round of “safety theater” that the internet will inevitably route around.

Sources & Further Reading

- Mashable – overview of the post-SCOTUS/UK landscape and the sprawl of age-verification mandates.

- Financial Times – early U.K. impact data: traffic drops and VPN workaround.

- The Verge – SCOTUS allowing a state age-gating law to take effect pending litigation.

- Washington Post – on over-removal and censorship pressures under the U.K. regime.

- Internet Society – technical critique of ID-based age checks weakening privacy/security.

- EFF – civil-liberties analysis of age-verification and risks for teens.

- U.K. government explainer – what the Online Safety Act claims to do (for comparison).

- COPPA history – background on how “just keep kids out” approaches backfire.