Why This Exists

If you’re like me—a technology leader with ADHD—you know the promise of AI tools often clashes with the reality of trying to use them in a sustainable, ethical, and mentally supportive way.

The tools promise clarity, productivity, and creative acceleration.

But too often, what they deliver is:

- A firehose of new apps, each demanding time and attention we don’t have.

- “Productivity porn” that assumes neurotypical focus and workflow.

- Ethical ambiguity, with tools trained on stolen labor and biased data, and built on exploitative practices.

- And a creeping pressure to hand over everything—even our humanity.

I want a better way. I want tools that respect people, not replace them. I want systems that support how neurodivergent brains actually work, not force us to mask or conform.

So today, I’m starting with a declaration.

Not a sales pitch. Not a product launch.

A manifesto.

Because before we choose our tools, we need to choose our principles.

The Ethical AI Manifesto (for Humans Who Think Differently)

- We lead with people, not prompts.

AI must amplify human potential, not override it. Our judgment, values, and lived experiences are non-negotiable parts of the process. - We optimize for clarity, not conformity.

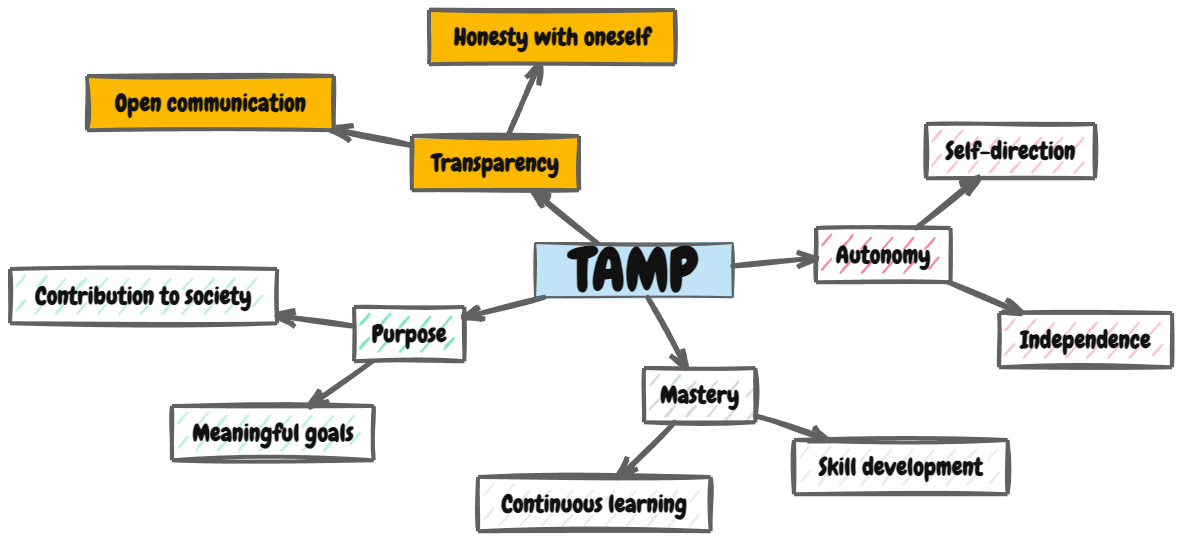

Human minds don’t always work in straight lines. The best systems are ones that bend, flex, and adapt—not ones that enforce sameness. - We demand transparency.

We will choose tools that are open about how they’re trained, what data they use, and how our inputs are stored and monetized. No black boxes. - We reject exploitation in all forms.

We do not use or support AI models built on the unpaid labor of artists, writers, or marginalized communities. If it can’t pass an ethical audit, it doesn’t belong in our stack. - We prioritize sustainability—mental and environmental.

We won’t trade human burnout for machine burnout. We acknowledge the energy costs of AI and choose tools that align with a regenerative future. - We treat AI as collaborator, not crutch.

We don’t outsource our integrity, creativity, or responsibility. AI can draft. We decide. - We build for neurodiverse minds, not against them.

We champion workflows that reduce friction, not just increase output. We experiment, adapt, and document—so others like us can thrive. - We use technology to create time—not consume it.

The goal isn’t to do more. The goal is to do less, better. With space to think. With room to breathe.

Why This Matters Now

As AI rapidly integrates into every corner of knowledge work, the risk is clear: Tools are being built faster than principles are being defined.

And if we don’t define those principles now, someone else will. Probably someone who’s never known the brain fog of ADHD. Or the exhaustion of masking in a corporate setting. Or the moral discomfort of benefiting from technologies that quietly exploit the very people they claim to help.

We don’t need “more productivity.”

We need better integrity.

We need healthier systems.

We need human-centered AI for non-neurotypical humans.

What’s Next

This manifesto isn’t a conclusion. It’s a beginning.

I’ll be building on these principles in future posts—diving into:

- What ethical AI workflows can look like for leaders.

- Tools that honor these values (and ones that don’t).

- How to build your own ADHD-friendly “human-first” productivity stack.

But I want to hear from you, too.

What’s missing? What resonates? What tools are you using—or avoiding—and why?

We build better when we build together.

— Roger

TL;DR (because I get it)

- AI should support, not replace, human judgment—especially for neurodivergent leaders.

- We need tools and systems grounded in ethics, accessibility, and authenticity.

- This manifesto outlines the principles we’ll use to guide AI adoption—starting now.
